Chapter 3


Right-size your lead scoring

Business maturity benchmarks will help you find the right type of lead scoring system for your company — and estimate the support and resources you'll need for it.

The trick is to find the sweet spot where the system isn't holding you back as you scale, but you're not burning energy building and maintaining something more complex than you need.

As a company grows and scales, it's more likely to have:

- Higher lead volume

- Multiple product offerings

- Multiple go-to-market motions (e.g., adding a sales-assisted track over an existing product-led track)

- More sales territories

- Different types of sales teams

So it likely needs more from its lead qualification system:

- More data points feeding a score

- More types of data feeding a score (e.g., behavioral and firmographic)

- Multiple lead scores for different teams and business areas

- More technology to support (e.g., first-party data tools like Segment, lead-to-account matching and routing platforms like LeanData, predictive scoring like MadKudu)

- More people to orchestrate lead scoring (e.g., ops, marketing, or data/analyst teams)

It's all about the quantity of leads you're seeing and the capacity you have to work them. Lead scoring may not be the biggest priority when you have enough people to cover what's coming in.

But as soon as you start to grow, run more complex go-to-market motions, and handle different types of leads, you need to optimize your lead scoring.

It's about prioritizing your time, and sending the right types of leads to the right salespeople to work them. Scoring helps improve productivity within your team's current capacity.

Let's illustrate a typical journey of a scaling SaaS company with the story of a hypothetical startup. We'll call it Sassafras, and they make a developer tool. A couple of years in, Sassafras has hired two full-time sales reps, Ami and Dot. They handle qualification and research leads manually. They also quickly filter out individuals with Gmail addresses, but in general, they're willing to talk to most of the people who request a demo or sign up for the product.

Meet Ami and Dot

At first, the sales duo use a spreadsheet to assign leads in a manual round robin. When Sassafras implements Salesforce to keep up with growing lead volume, Ami and Dot start using Salesforce's Process Builder to split leads between them.

Ami and Dot use spreadsheets and then Salesforce to manage leads


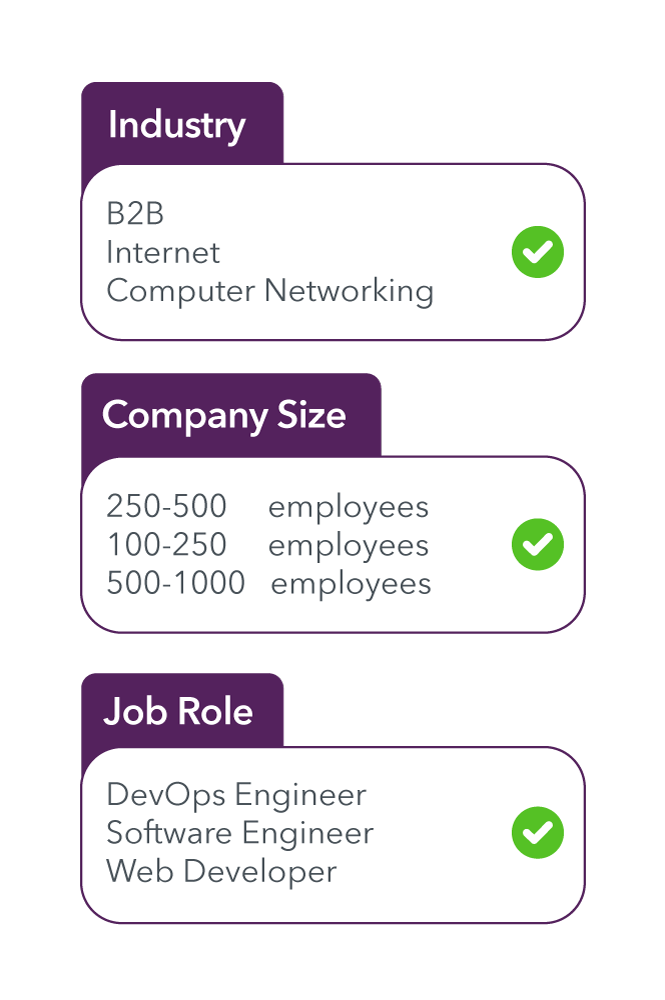

As Sassafras grows, their ICP definition starts getting crisper. Ami and Dot now have a great intuition about good prospects, and they make a shortlist of three firmographic and demographic characteristics they think are the best "tells" — industry, company size, and job role.

Ami and Dot's rules of thumb for what a good lead looks like


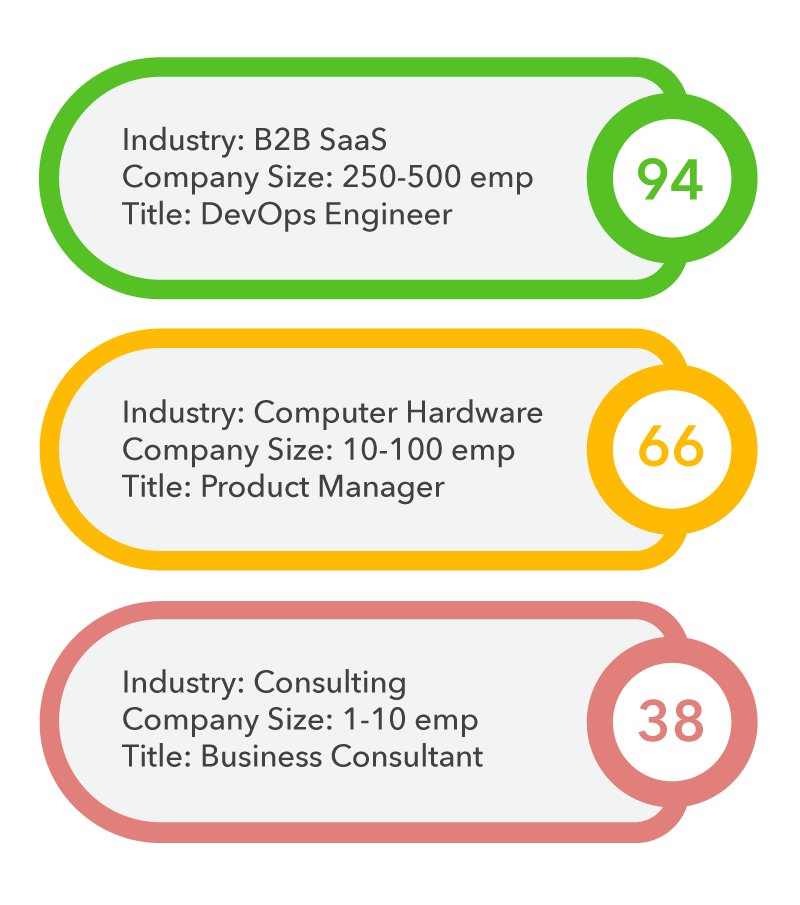

The Head of Demand Generation, Kai, uses their shortlist to build a point-based lead score. It assigns point values to each of the three characteristics, and Kai sets it up with the built-in tools in Sassafras's marketing automation platform (MAP), HubSpot. New leads automatically get a score as they come in, and now Ami and Dot have an easier time prioritizing all the prospects piling up on their virtual desks.

Sample lead score: Ami and Dot focus on leads with higher scores and don't go below 60.
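Here's a minimal Python sketch of a score like Kai's, assuming three hypothetical point tables for industry, company size, and job role (the chapter doesn't give Sassafras's actual values). It sums the points, drops anything under Ami and Dot's cutoff of 60, and sorts the rest so the strongest leads come first. At Sassafras this logic lives in HubSpot's built-in scoring tools rather than in custom code; the sketch just makes the arithmetic visible.

```python
from dataclasses import dataclass

# Hypothetical point tables for the three "tells". Not Sassafras's real values.
INDUSTRY_POINTS = {"software": 40, "fintech": 30, "retail": 10}
SIZE_POINTS = {"1-10": 5, "11-50": 15, "51-200": 30, "201+": 20}
ROLE_POINTS = {"engineering": 30, "product": 20, "marketing": 5}

THRESHOLD = 60  # Ami and Dot don't work leads scoring below 60


@dataclass
class Lead:
    email: str
    industry: str
    company_size: str
    role: str


def score(lead: Lead) -> int:
    """Add up the points for each characteristic; unknown values earn 0."""
    return (
        INDUSTRY_POINTS.get(lead.industry, 0)
        + SIZE_POINTS.get(lead.company_size, 0)
        + ROLE_POINTS.get(lead.role, 0)
    )


def work_queue(leads: list[Lead]) -> list[tuple[int, Lead]]:
    """Keep leads at or above the threshold, highest score first."""
    scored = [(score(lead), lead) for lead in leads]
    kept = [pair for pair in scored if pair[0] >= THRESHOLD]
    return sorted(kept, key=lambda pair: pair[0], reverse=True)


leads = [
    Lead("ana@acme.dev", "software", "51-200", "engineering"),  # 40 + 30 + 30 = 100
    Lead("bo@shop.com", "retail", "1-10", "marketing"),  # 10 + 5 + 5 = 20
    Lead("cy@pay.io", "fintech", "11-50", "product"),  # 30 + 15 + 20 = 65
]
for points, lead in work_queue(leads):
    print(points, lead.email)
# prints "100 ana@acme.dev" then "65 cy@pay.io"
```

The 20-point lead never reaches the queue, which is exactly the "don't go below 60" rule in action.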


A few fundraises and TechCrunch spotlights later, Sassafras is the hottest SaaS company around. Their little developer tool has grown into a platform with multiple products, and they've hired more sales and customer success teammates. They define geographic sales territories, and as Sassafras goes upmarket to sell to larger companies, Ami and Dot split the sales team into SMB, midmarket, and enterprise segments.

Ami and Dot's growing sales team


While their sales team is going after big fish, Sassafras is also offering a free trial and an affordable "individual plan" for their original tool, for developers who just want to sign up and start using it on a monthly subscription. They're actively cultivating a product-led motion, in addition to their growing sales motion.

Kai, the former head of demand gen, is now the head of RevOps, with a team of three. They've been tinkering with the point-based lead score to make it more useful for sales, adding behavioral data to detect buyer intent.

But overall, Sassafras is still having a tough time prioritizing the large inbound lead volume they're getting and figuring out how to close bigger enterprise deals. They need a more sophisticated lead scoring model, more tools to support it, more data sources, and more teammates to keep everything running smoothly in the background. Meanwhile, their sales team keeps scaling up.

Kai is considering switching to a predictive, machine-learning-based scoring model. They're also considering creating two different lead scores: one for their product-led velocity motion, and one for their sales motion serving larger companies.

In the routing department, Sassafras has switched to LeanData because they were having a hard time updating routing rules in Salesforce each time their sales team grew, divided, or otherwise changed. Sometimes Ami's velocity leads were routed to Dot's enterprise team, and vice versa, slowing down response times. Their stack now consists of Salesforce, LeanData, HubSpot, and Clearbit for data enrichment.

That's the story of Sassafras. Sound like anyone you know?

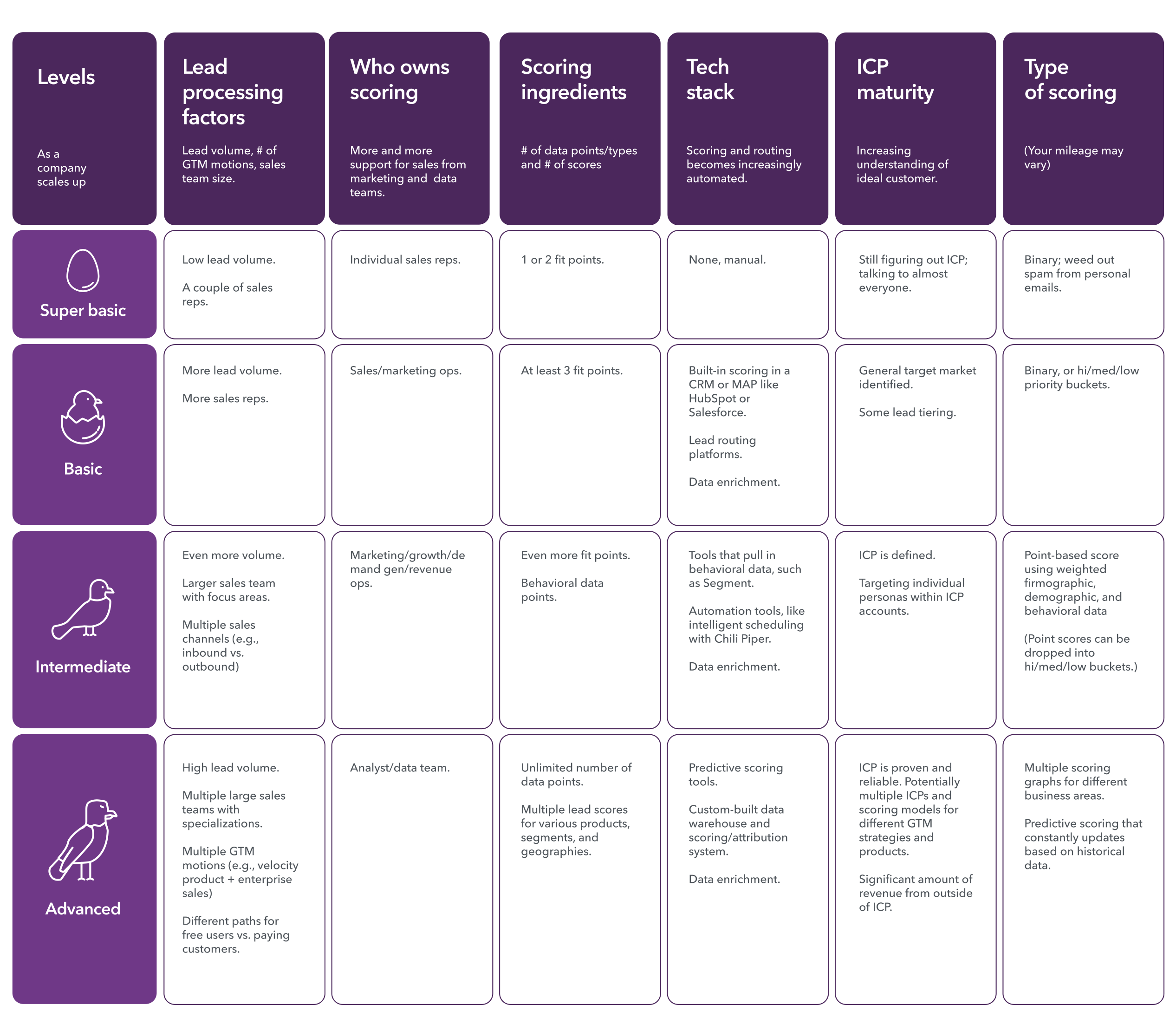

Maturity benchmarks for lead scoring systems

The following chart outlines this common trajectory, though your mileage may vary. The benchmarks are intended to help you identify a lead scoring system that best fits your company's complexity and resources. It's all about matching your tech stack to your team maturity to your go-to-market maturity to your lead volume … you get the drift.

Your journey may not be as linear as what's outlined here. These benchmarks are less about forcing your team to follow a "track," and more about placing yourself in an appropriate class so you can investigate and course-correct where things seem off. If you're very advanced in some categories but basic in others, you may not be getting the full value of your investments, because the basic areas are holding you back.

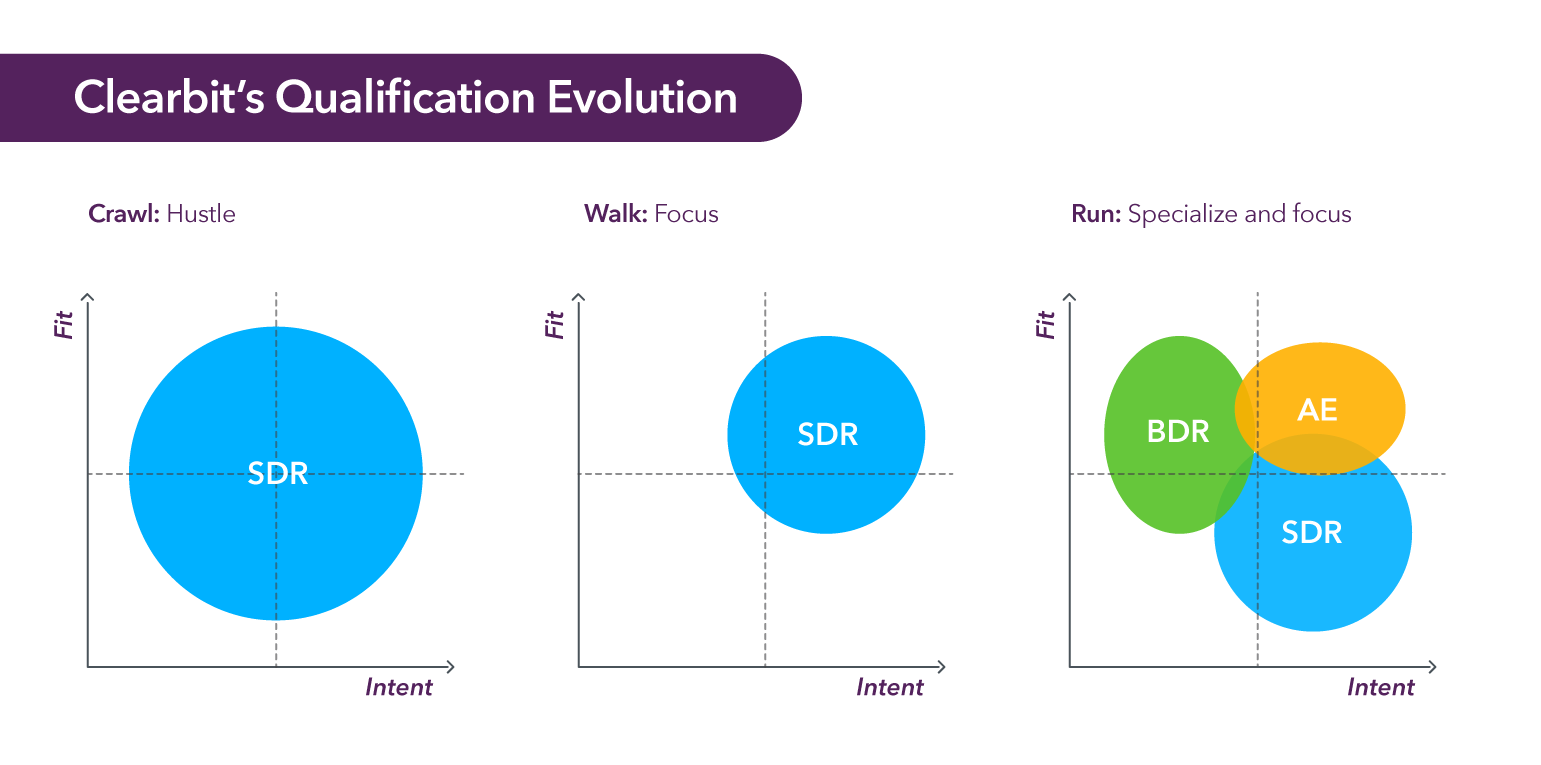

Clearbit is an example of a company that's progressed through these levels over time, though not necessarily in one nice clean line. It's a constant work in progress. We share details about our setup in Examples of lead scoring models, but here's a sneak peek to illustrate our evolution.

At the very start of our time on planet Earth, Clearbit didn't use much scoring. Sales prioritized hand raises over any other inbound leads, developing their intuition about what makes a good lead through conversations and manual research.

Eventually, we started using an A/B/F bucketing system to score leads. It was based on a few firmographic and individual data points common to our past closed/won opportunities.

In 2020, we shifted company strategy and pared down our qualification criteria to center around one metric: Alexa Rank. This was an effort to focus on high-LTV prospects (read more in “Searching for healthier revenue”).

Recently we started opening up our qualification criteria again, and we are using MadKudu to do predictive, machine-learning scoring based on past data. We're also using an advanced custom-built model to detect purchase intent—it picks up on a lead's behaviors like interactions with our marketing material and product usage.

Despite our expansions and contractions in company strategy over time (not to mention the ever-present "we're still figuring it out" feeling), if we zoom out, we can see a crawl-walk-run progression in Clearbit's 2D qualification maturity, which also tracks with our evolving sales team complexity.

The early "crawl" stage was about the hustle: We had one core sales team of SDRs working everyone under the sun.

The later "walk" stage was about focus: We focused our SDRs' time on high-fit, high-intent leads. Anyone else was warmed up by marketing until they were ready to talk.

The current "run" stage is about specialization and focus: As we diversified our goals at Clearbit, we clarified each sales team's focus. We now send slam-dunk, high-fit, high-intent leads straight to AEs. SDRs handle the low-fit leads that have high intent, like someone outside our typical industry making a demo request, and qualify them before passing them to a specialized, expensive AE. BDRs go after the high-fit leads who don't yet have intent, educating and warming them up until they're ready to talk.
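The run-stage split can be read as a two-by-two grid of fit and intent. Below is a minimal Python sketch of that grid, assuming fit and intent have already been reduced to high/low flags. The `route_scores` helper and its cutoffs of 70 and 50 are hypothetical, and sending low-fit, low-intent leads to marketing nurture is an assumption borrowed from the walk stage, since the run-stage description doesn't say where they go.

```python
from enum import Enum


class Owner(Enum):
    AE = "account executive"
    SDR = "sales development rep"
    BDR = "business development rep"
    NURTURE = "marketing nurture"  # assumption: carried over from the walk stage


def route(high_fit: bool, high_intent: bool) -> Owner:
    """Map a lead's fit and intent flags to the team that works it."""
    if high_fit and high_intent:
        return Owner.AE  # slam dunk: straight to an AE
    if high_intent:
        return Owner.SDR  # low fit, high intent: qualify before an AE sees it
    if high_fit:
        return Owner.BDR  # high fit, no intent yet: educate and warm up
    return Owner.NURTURE


# Hypothetical cutoffs on 0-100 fit and intent scores.
FIT_CUTOFF = 70
INTENT_CUTOFF = 50


def route_scores(fit: int, intent: int) -> Owner:
    """Turn raw scores into flags, then route."""
    return route(fit >= FIT_CUTOFF, intent >= INTENT_CUTOFF)


print(route_scores(85, 90).name)  # AE
print(route_scores(30, 75).name)  # SDR
print(route_scores(90, 10).name)  # BDR
```

Keeping the grid in one small function is what makes "change our rules easily" possible: moving a cutoff or reassigning a quadrant is a one-line change, whatever tool actually executes the routing.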

We transitioned from crawl to walk to run quite quickly, because we'd already set up most of the tools that help with the complexity of scoring and routing. They let us send different types of leads to multiple sales groups and change our rules easily. Jon Delich, Head of RevOps at Clearbit, says, "It's been a fast evolution to that specialization and focus stage. It can go quickly, assuming you have the right tech stack in place and can implement those changes, which Clearbit did."

What about you? When it comes to lead scoring, if you were to rate your own company as basic, medium, or advanced for each category in the chart, putting an X over a box in each column, would you see a straight horizontal line? (If you're all over the map, it's okay—we're in no place to judge.)

Let's dive a little deeper to explain three types of lead scoring mentioned in the chart: binary, point-based, and predictive.

Three types of lead scoring based on ICP maturity

MadKudu's maturity framework for three stages of lead scoring suggests what type of lead score to use based on a company's understanding of its ICP.

Stage 1: I'm figuring out my ICP.

→ Use a binary lead score ("Lambs" versus "Spam")

At the earliest stage, a company's sales team is still figuring out their ICP and learning how to sell, what to sell, and who to sell it to. According to MadKudu, benchmarks for companies at this level are:

- up to five SDRs

- fifty employees

- around 100 qualified leads per day — or $1M ARR.

At this point, the main goal of lead scoring is weeding out spam, such as consumers and vSMBs with no budget.

But the remaining leads, which MadKudu calls "lambs," are fair game, helping sales reps develop discernment about who to sell to. "Marketing needs to provide [sales] hunters with tasty lambs," they write, adding that it's okay to let sales wade through a high volume and deal with some level of uncertainty, because it's too early to tell which lambs are more tender than others. Filtering too much could restrict the learning process. That said, you don't want to waste reps' time with obvious spam.

The recommended type of lead scoring at this stage is binary — a lead is either a "yes" or a "no."

A few very basic fit data points drive this:

- A "no," or spam, is any personal email address—like @gmail—that might indicate a consumer or a vSMB with no budget.

- A "yes," or a lamb, is a lead whose title or team indicates that they have budget and buying power.

The sales reps cut their teeth on as many lambs as possible until, ideally, it's easy to identify an ICP.
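A binary score can be as small as two checks. This Python sketch assumes a short, hypothetical list of personal email domains and buyer title keywords; a real list would be longer and tuned to your market. Leads that match neither rule come back as a "no" by default, but a team that wants to filter less at this stage can flip the hypothetical `unknown_is_lamb` flag.

```python
# Hypothetical, deliberately short lists; tune these for your own market.
PERSONAL_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com", "outlook.com"}
BUYER_KEYWORDS = {"head", "director", "vp", "chief", "founder", "manager"}


def is_lamb(email: str, title: str, unknown_is_lamb: bool = False) -> bool:
    """Binary fit score: True means "lamb" (yes), False means spam (no)."""
    domain = email.rsplit("@", 1)[-1].strip().lower()
    if domain in PERSONAL_DOMAINS:
        # Personal address: likely a consumer or a vSMB with no budget.
        return False
    words = title.lower().replace(",", " ").split()
    if any(word in BUYER_KEYWORDS for word in words):
        # Title suggests budget and buying power.
        return True
    # Neither rule matched; the caller decides how strict to be.
    return unknown_is_lamb


print(is_lamb("sam@gmail.com", "VP Engineering"))  # False
print(is_lamb("sam@acme.io", "VP Engineering"))  # True
print(is_lamb("sam@acme.io", "Software Engineer"))  # False
```

The spam check runs first on purpose: a "VP" title on a personal address still gets filtered out, which matches the idea that weeding out spam is the main job at this stage.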

Stage 2: I know my ICP and want to generate repeatable revenue with it.

→ Use a simple point-based lead score ("If it looks like a duck…")

In this stage, a company knows their ICP and wants to sell to this target market in a predictable way.

Use a point-based lead score, where leads that look more like your ICP are scored higher.

A point-based score is based on a handful of data points. For example:

- (A) Persona/role = Product Managers

- (B) Companies that have raised venture capital

- (C) Companies based in the United States

- (D) Companies that sell software

- (E) Companies with 50–200 employees

You can give each attribute a different weight. (We also like to assign negative values to attributes that don't fit the ICP.)
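One way to express a point-based score is as a list of (rule, points) pairs. This Python sketch encodes attributes (A) through (E) with hypothetical weights, plus one hypothetical negative rule for very small companies, and reports which rules fired so reps can see why a lead scored the way it did. The keys in the lead dictionary (`role`, `vc_backed`, `employees`, and so on) are assumptions about what your enrichment data provides.

```python
from typing import Any, Callable

LeadData = dict[str, Any]
Rule = tuple[str, Callable[[LeadData], bool], int]

# Attributes (A) through (E), with hypothetical weights.
RULES: list[Rule] = [
    ("A: Product Manager", lambda lead: lead.get("role") == "product manager", 25),
    ("B: raised venture capital", lambda lead: bool(lead.get("vc_backed")), 15),
    ("C: based in the US", lambda lead: lead.get("country") == "US", 10),
    ("D: sells software", lambda lead: bool(lead.get("sells_software")), 20),
    ("E: 50-200 employees", lambda lead: 50 <= lead.get("employees", 0) <= 200, 20),
    # Hypothetical negative rule for an attribute that doesn't fit the ICP.
    ("fewer than 10 employees", lambda lead: 0 < lead.get("employees", 0) < 10, -20),
]


def point_score(lead: LeadData) -> tuple[int, list[str]]:
    """Return the total points and the names of the rules that fired."""
    fired = [(name, points) for name, matches, points in RULES if matches(lead)]
    return sum(points for _, points in fired), [name for name, _ in fired]


good_fit = {"role": "product manager", "vc_backed": True, "country": "US",
            "sells_software": True, "employees": 120}
tiny_shop = {"role": "product manager", "country": "US", "employees": 4}
print(point_score(good_fit)[0])  # 90
print(point_score(tiny_shop))  # (15, ['A: Product Manager', 'C: based in the US', 'fewer than 10 employees'])
```

Returning the fired rules alongside the number costs almost nothing and makes the score easier to debug and to explain to sales.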

Point-based ICP scoring will work for quite a while, until your company bumps up to the next level of complexity.

Stage 3: I want 80% of revenue to come from 20% of my leads.

→ Use advanced predictive lead scoring ("Be like a Kudu and adapt to your surroundings")

At this stage, your lead scoring model needs to handle a ton of complexity, such as:

- Going upmarket and entering new verticals and geographies

- Upselling to existing customers

- A highly tiered sales and CS team

- Multiple product lines

- Selling to companies where product users and buyers are different people

- Multiple GTM motions, like product-led tracks and enterprise tracks

Lead scoring may even start to split off from your ICP. In the previous stage, point-based lead scoring was all about how much a lead looks like the ICP, and only the ICP. If it doesn't look enough like the ICP, there's no sales convo at all.

But maybe your company becomes so diversified and complex that you can, at the same time, have an ICP (like aspirational enterprise logos, for example), while still making a lot of revenue from people that look nothing like it (such as many SMBs in many low-priority industries). For some companies, this non-ICP revenue is a large slice or even majority of their revenue. If they keep using the Stage 2 "ICPs only" scoring, they'll jettison everyone who doesn't look aspirational, and they won't meet their growth goals.

If you find yourself in this situation, that doesn't mean your ICP is wrong; it just means your lead scoring model needs to change. It needs more nuance so that it can simultaneously score ICPs and let other high-revenue-potential groups through the gate. The new objective is to generate 80% of your revenue from 20% of your leads, across the business. A predictive scoring model is more inclusive than the previous approach and can look at revenue potential based on past track records for a variety of lead types, products, and markets. It ingests data from a complex environment and produces a simple score.
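To check how close a model gets to that 80/20 objective, you can rank past leads by score and measure how much of their revenue came from the top fifth. This Python sketch assumes you have (score, revenue) pairs for historical leads, with revenue 0 for leads that never converted; the function name and the sample numbers are made up for illustration.

```python
def top_share(history: list[tuple[float, float]], top_percent: int = 20) -> float:
    """Fraction of total revenue from the top `top_percent` of leads by score.

    `history` holds (score, revenue) pairs for past leads, with revenue 0 for
    leads that never converted.
    """
    if not history:
        raise ValueError("history is empty")
    ranked = sorted(history, key=lambda pair: pair[0], reverse=True)
    # Integer ceiling division avoids float rounding surprises in the cutoff.
    cutoff = max(1, (len(ranked) * top_percent + 99) // 100)
    total = sum(revenue for _, revenue in ranked)
    if total == 0:
        return 0.0
    return sum(revenue for _, revenue in ranked[:cutoff]) / total


# Made-up history: 10 past leads with their model scores and closed revenue.
history = [
    (0.95, 50_000), (0.90, 30_000), (0.70, 5_000), (0.60, 5_000), (0.50, 5_000),
    (0.40, 5_000), (0.30, 0), (0.20, 0), (0.10, 0), (0.05, 0),
]
print(f"{top_share(history):.0%}")  # 80%
```

If the share comes out well below 80%, the model is spreading high-value leads across the whole ranking instead of concentrating them at the top.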

Ideally, a predictive scoring model can:

- Produce multiple lead scores for different lines of business, sales tiers, regions, or GTM motions.

- Score at both the individual lead level and the account level to differentiate between MQLs and MQAs. (Buyers and users may be different people, and it's difficult to map behaviors and fit across users and accounts to a simple point-based lead score model.)

- Incorporate behavioral data from the marketing funnel, sales cycle, and the product, plus as many types of firmographic and demographic data needed.
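The MQL/MQA distinction can be sketched by rolling individual lead scores up to the account. This Python example assumes each lead already has 0 to 100 fit and intent scores, takes an account's fit as the best fit among its contacts, and treats the sum of its contacts' intent as account engagement. Those aggregation rules and all three thresholds are hypothetical choices, not a standard.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical thresholds on 0-100 scales.
FIT_CUTOFF = 70
MQL_INTENT = 60       # one person's intent needed for an MQL
MQA_ENGAGEMENT = 100  # combined intent across an account needed for an MQA


@dataclass
class ScoredLead:
    email: str
    account_id: str
    fit: int     # 0-100, mostly firmographic
    intent: int  # 0-100, this person's own behavior


def mqls(leads: list[ScoredLead]) -> list[str]:
    """Lead level: a single person who fits and shows enough intent."""
    return [lead.email for lead in leads
            if lead.fit >= FIT_CUTOFF and lead.intent >= MQL_INTENT]


def mqas(leads: list[ScoredLead]) -> set[str]:
    """Account level: the group's combined activity crosses the bar,
    even when no single contact would qualify as an MQL."""
    best_fit: dict[str, int] = defaultdict(int)
    engagement: dict[str, int] = defaultdict(int)
    for lead in leads:
        best_fit[lead.account_id] = max(best_fit[lead.account_id], lead.fit)
        engagement[lead.account_id] += lead.intent
    return {
        account
        for account, fit in best_fit.items()
        if fit >= FIT_CUTOFF and engagement[account] >= MQA_ENGAGEMENT
    }


leads = [
    # Three people at Acme: two users and the buyer. None is an MQL alone.
    ScoredLead("dev1@acme.com", "acme", 80, 40),
    ScoredLead("dev2@acme.com", "acme", 75, 35),
    ScoredLead("cto@acme.com", "acme", 80, 30),
    # One very engaged person at Beta.
    ScoredLead("pm@beta.io", "beta", 90, 70),
]
print(mqls(leads))  # ['pm@beta.io']
print(mqas(leads))  # {'acme'}
```

Acme never produces an MQL, but its users and buyer together add up to 105 points of engagement, which is the kind of signal a lead-only, point-based model misses when buyers and users are different people.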

A predictive model uses machine learning to take in the latest fit and behavioral data from recent deals, product usage, and more, so it evolves as your product evolves. The model needs to be adaptive and fast, giving you simple guidance about how to handle a lead or account, no matter how complex the inputs are.
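To make the idea concrete, here's a deliberately tiny stand-in for a predictive model: logistic regression fit with plain gradient descent on past leads labeled converted (1) or not (0). It isn't how MadKudu or any particular vendor builds its models, and the three features and the training rows are hypothetical. Retraining it on a rolling window of recent deals is what would let the scores shift as your product and market change.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Estimated probability that a lead with features `x` converts."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train(rows: list[list[float]], labels: list[int],
          epochs: int = 2000, lr: float = 0.5) -> tuple[list[float], float]:
    """Fit logistic regression weights with full-batch gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    m = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = predict(weights, bias, x) - y  # d(log loss)/dz for this row
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


# Hypothetical features, each scaled to 0-1:
# [firmographic fit, product usage, visited pricing page]
rows = [
    [0.9, 0.8, 1], [0.8, 0.6, 1], [0.7, 0.9, 0], [0.6, 0.7, 1],  # converted
    [0.3, 0.1, 0], [0.2, 0.4, 0], [0.5, 0.2, 1], [0.1, 0.0, 0],  # did not convert
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train(rows, labels)
hot = predict(weights, bias, [0.85, 0.7, 1])
cold = predict(weights, bias, [0.2, 0.1, 0])
print(f"hot lead: {hot:.2f}, cold lead: {cold:.2f}")
```

However many inputs go in, what comes out is a single probability per lead, which is the "simple guidance" a rep or a routing rule can act on.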

Next, let's look at some examples of real-life scoring models at the different complexity levels we've outlined.