Chapter 6


The human in the lead score

Lead scoring is something we're always iterating on at Clearbit, and that's how it's supposed to be. We haven't figured it all out yet, and it's with a Cheesecake Factory-sized slice of humility that we share our own journey.

We talked to Julie Beynon, Clearbit's Head of Analytics, and Colin White, our Head of Demand Generation, about the challenges of designing our lead scoring and attribution model. The most difficult part, they say, isn't the implementation.

"The hard part is getting sales and marketing alignment, getting everyone to believe in the model, and getting people to agree on definitions," says Julie. "Definitions are overlooked as the most difficult areas of lead scoring — what's a good lead, and who do we want to go after? We're always grappling with this. The technical limitations of lead scoring aren't the same as they used to be because there are a lot of tools that solve this problem today — and yet marketers are still struggling with lead qualification. This points to alignment and definitions as the big challenges."

Colin agrees: "Definitions are the biggest source of complexity. We have a bunch of different use cases and lead types, and they all have different criteria for what's high quality."

Aligning the marketing team with the sales team is an essential ingredient in building effective qualification systems. As Julie puts it, "You can't eliminate the human from the lead scoring process. Even with our advanced models, even with MadKudu, our sales leaders and even our product leaders are the gatekeepers for leads and how they're qualified."

Validate your model with sales

Julie tells the story of when she, Colin, and a few other growth marketers brought a carefully generated lead list to Cliff Marg, a longtime Clearbit sales lead and former AE. We'd just started a new outbound motion, and it was marketing's job to provide high-quality lists. This particular one was the crème de la crème output of our latest model, full of ICP-fit companies that used the right technology. Julie and Colin sat on a Zoom call with Cliff, who shared his screen as he pulled up the website of each lovingly chosen company. Within five seconds he could tell whether it was a fit, and picked through the list: no, no, yes, no, no, no.

It was fascinating to watch. "Part of lead scoring is data-driven, and part of it just isn't," says Julie. "There are some things you just need a person to look at. There's no way we can filter for that, and I think it's one of the things that people get stuck in. They want to take the human completely out of the lead qualification process, and that's a mistake."

Cliff talked the marketers through the reasons why he'd disqualify a prospect. For example, maybe a company looked like a fit in terms of size and industry but dealt with very low volume — and the typical Clearbit use cases that help businesses scale processes wouldn't fit. He also used Clearbit's enrichment lookup tool to quickly pull up a company's profile, check out its firmographic attributes, and show Julie and Colin which data points he was using to qualify (e.g., has HubSpot, is B2B, is in the US).

Some of the characteristics Cliff was looking for in a lead were ones that we couldn't translate into data points for our model. But others were, and Julie and Colin incorporated those into list building for the second round.

"We involved Cliff too late the first time, but now we always try to include sales from the start," says Julie. "The data-based leads we were sending them didn't align with what they sent us back, and we didn't understand why until we sat down and watched Cliff qualify. The biggest takeaway from that day is that we can't forget the importance of integrating sales at every stage. Every decision, every time. Sales needs to be there from the start and there needs to be a constant feedback loop. If scoring is only done by the marketing team, it's never going to work."

We try to cultivate relationships and regular conversations between marketing and sales managers and AEs. And lead scoring is never a one-and-done project. For example, we just made a change to our scoring model in MadKudu, and we didn't put it into production until all four of Clearbit's sales leaders approved it. We gave them a list of 1,000 leads to spot check, comparing leads from the new and old models to confirm that the new model produced higher-quality leads. "For sales, it's less of a black box because they can see individual leads and the improvement over time," says Julie. "What makes a good lead is not truly marketing's decision. We may be doing all the implementation and tracking for lead scoring, but ultimately we're serving sales with leads, and the more involved they are in the process, the more we both succeed."

Marketing monitors the DQ (disqualification) reasons that reps enter into Salesforce when they disqualify a lead. Then we feed those insights about lead and company profiles, plus feedback from sales, back into the system. A few years ago, senior members of the sales and success team were telling us that many leads from marketing were not going to turn into successful customers. "Alexa rank is the attribute to look at," they kept saying.

Sure enough, when we eventually revisited our ICP and ran regression analyses on our highest-LTV customers, Alexa rank was the strongest predictor of loyalty and usage, far outranking all the other firmographic attributes we'd been using in our ICP model for years.

Keep your sales team close, and never stop improving your scoring model together.

A lead scoring "lesson learned" at Clearbit

We've been saying that a lead scoring model needs to match a company's maturity, so we'll offer a story of when Clearbit got this a tiny bit wrong.

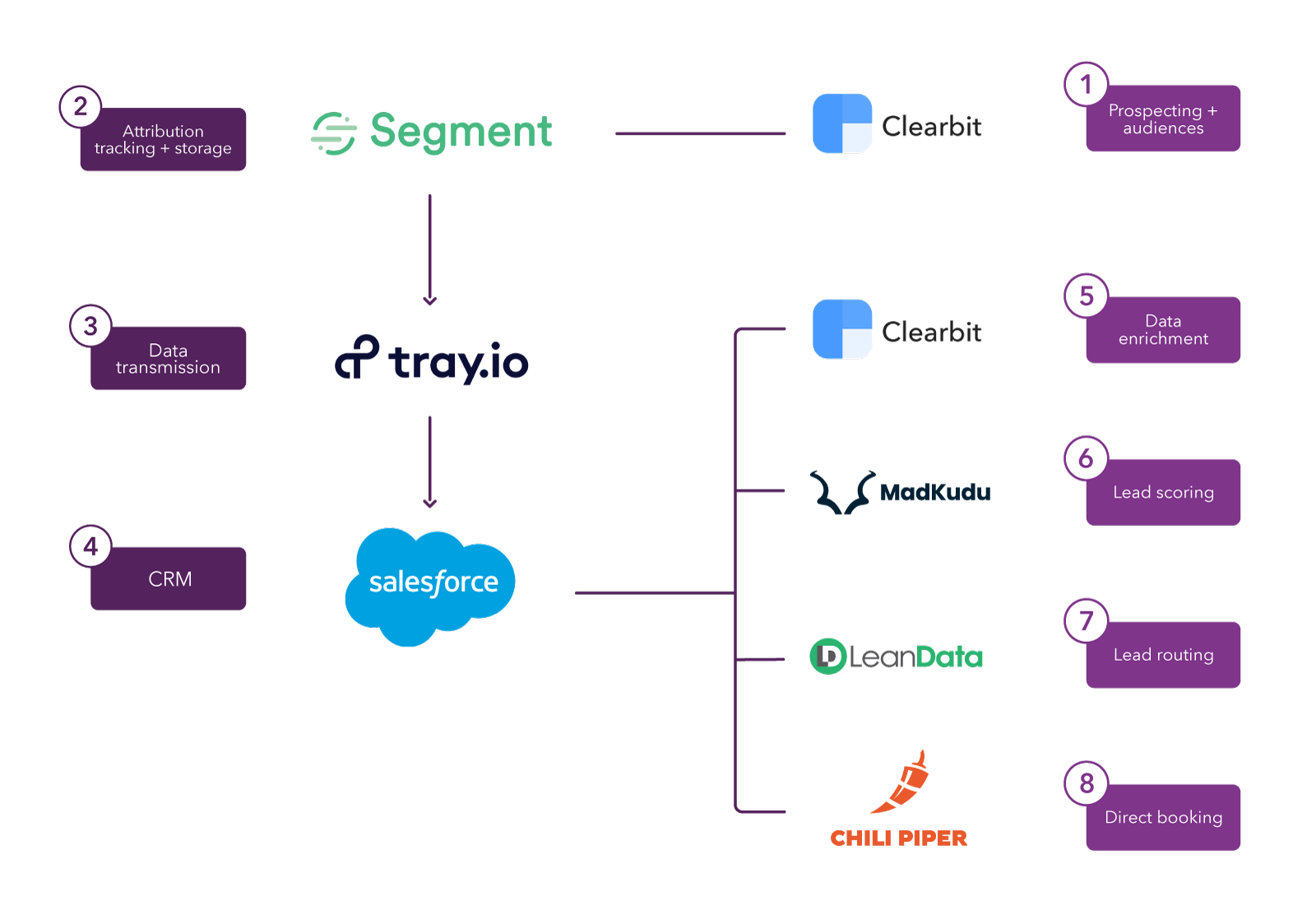

As we shared in Lead scoring in practice, Clearbit's entire scoring and routing system is home-grown. We've sewn together different tools that are all great at what they do, customizing our setup for our specific marketing use cases, when maybe HubSpot or Marketo could have given us a simple one-stop solution.

Initially, we created an advanced intent scoring model, only to discover it was too complex for our current needs. Our fancy model was intended to catch good leads that visited our website but didn't make a sales contact request or sign up for a free Clearbit trial. They were flying under the radar, we thought, and we could scoop them up and send them to an outbound sales team for outreach.

The model captured a lead's interactions with many of our marketing channels, assigning points for reading our blog posts, signing up for webinars, downloading an eBook, etc. When they got enough points to hit a threshold, we thought of them as sales ready and sent them to the BDR team. And presto! The lead would be in the sales process without them needing to raise a hand at all. We were excited to send sales this additional stream of good leads that, we thought, had previously been slipping through our fingers.
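The point-and-threshold idea described above can be sketched in a few lines. This is only an illustration: the event names, point values, and threshold below are hypothetical and are not Clearbit's actual configuration.

```python
# Hypothetical point values per marketing interaction (illustrative only).
POINTS = {
    "blog_post_read": 5,
    "webinar_signup": 20,
    "ebook_download": 15,
}

# Hypothetical score at which a lead is treated as sales ready.
SALES_READY_THRESHOLD = 50


def intent_score(events):
    """Sum the points for each recorded interaction; unknown events score 0."""
    return sum(POINTS.get(event, 0) for event in events)


def is_sales_ready(events):
    """Return True once the accumulated score reaches the threshold."""
    return intent_score(events) >= SALES_READY_THRESHOLD


# Example: one lead's interactions across channels.
lead_events = [
    "blog_post_read",
    "webinar_signup",
    "ebook_download",
    "webinar_signup",
]
print(intent_score(lead_events), is_sales_ready(lead_events))
```

In a sketch like this, a lead crosses the threshold purely through accumulated engagement, without ever submitting a contact request.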

But when we released the model into the wild, we were surprised to find it generated only a handful of additional leads for sales in a typical week, amounting to little more than one lead per rep, per week. More importantly, the group was a hodgepodge of leads with different use cases and needs, which would have required more BDR training.

Julie says, "The model could provide an extra 1% of leads, but the training the sales team needed to handle the extra diversity wasn't worth it. They'd need to create and learn a new sales motion for someone who, say, hadn't done a contact request but had visited a lot of website pages. That's a very different conversation than the 99% of conversations they're already trained on."

The volume we were sending simply wasn't high enough to justify developing new sequences and training a whole outbound BDR team on a new set of use cases.

So, what was going on? Why were there so few leads?

Upon closer examination, nearly everyone the model scored as "100" was already being handled by sales, because they'd already done a contact request or free trial. Clearbit didn't yet have enough lead volume to make the model generate many "under the radar" ICP leads.
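A quick check like the one below would have surfaced this overlap before launch. It is a minimal sketch under assumed field names (`contact_request`, `free_trial`) and made-up sample leads. It counts how many top-scoring leads are not already in a hand-raise flow.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    email: str
    score: int
    contact_request: bool = False  # hypothetical flag: asked to talk to sales
    free_trial: bool = False       # hypothetical flag: signed up for a trial


def net_new_leads(leads, threshold=100):
    """Leads at or above the threshold that sales is not already handling."""
    return [
        lead
        for lead in leads
        if lead.score >= threshold
        and not (lead.contact_request or lead.free_trial)
    ]


# Made-up sample data for illustration.
sample = [
    Lead("a@example.com", 100, contact_request=True),
    Lead("b@example.com", 100, free_trial=True),
    Lead("c@example.com", 100),
    Lead("d@example.com", 60),
]

under_the_radar = net_new_leads(sample)
print(len(under_the_radar), "of", len(sample), "leads are net new for sales")
```

If the net-new count is tiny compared with the number of leads at the threshold, the model is mostly rediscovering leads that sales already has.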

We realized that our marketing tended to be a bit shortsighted — focused, yes, but missing coverage along the funnel. Our efforts were mostly trying to push ICP leads into a contact request flow — meaning we weren't engaging, nurturing, and developing high-intent leads. There wasn't enough material encouraging leads to learn outside of the hand-raise stream.

The takeaway

Essentially, we'd built an intent-based model to find people as they were becoming "ripe" for the sales process, only to discover that we hadn't created the conditions for them to ripen and engage at all.

We shifted our strategy to develop leads across longer journeys, with offers and content for people to explore Clearbit, as well as education, nurture, and re-engagement campaigns. That way, we proactively engage high-fit prospects who have shown interest, but aren't yet at sales readiness. Our re-engagement email campaign kicks in at a lower intent score, then nurtures the lead until they hit the right intent threshold to go to a sales rep. Julie says, "The re-engagement campaign is a good first step and a way for marketing to use the scoring model before getting sales involved."
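The two-stage routing described above might look something like this sketch. The threshold values, the `is_high_fit` flag, and the destination labels are assumptions made for illustration, not our production setup.

```python
# Hypothetical thresholds: re-engagement starts at a lower intent score
# than the hand-off to a sales rep.
REENGAGEMENT_THRESHOLD = 40
SALES_THRESHOLD = 80


def route_lead(intent_score, is_high_fit):
    """Pick a destination for a lead based on fit and intent score."""
    if not is_high_fit:
        return "general_marketing"      # low-fit leads stay in broad marketing
    if intent_score >= SALES_THRESHOLD:
        return "sales_rep"              # intent is high enough for sales
    if intent_score >= REENGAGEMENT_THRESHOLD:
        return "reengagement_campaign"  # nurture until sales-ready
    return "general_marketing"          # not enough interest shown yet


for score in (20, 55, 90):
    print(score, route_lead(score, is_high_fit=True))
```

The design choice here is that marketing acts on the score first, at the lower threshold, and sales only gets involved once the lead crosses the higher one.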

We'll grow into our advanced model, but we think it's pretty funny that we did everything the blogosphere says we're "supposed" to do in lead scoring… and it didn't quite work. Just because you have the technology, resources, and skills to do something, doesn't mean you should.

Colin explains that most early-stage companies don't need a high level of intent scoring complexity. "A lot of SaaS businesses have eBooks and webinars, but they don't take them into account in the lead score," he says. "They let those engaged leads hang out and wait until they raise their hand. In that case, scoring is pretty simple on the behavioral side; it's about whether or not they made a demo request. Then it's about the firmographic piece. Clearbit is now in a position where we have the tech stack to be able to do the fancy stuff, but a lot of companies aren't. Realistically, it's more than a young company needs."

The fact that Clearbit is a little tech-advanced for its age has been helpful, in that we can "grow into" our tech stack and transition to more complex operations more quickly than companies that are truly starting from zero. But at the same time, our experiences have shown us firsthand that a good scoring system will only reach its full power when it matches the company's GTM maturity, staffing, and other resources.