Chapter 5


Sales Forecasting

Neil Ryland, CRO at Peakon

In the fall of 2010, the CEO of Huddle—my boss at the time—gathered our senior team in a conference room. The sun was beating down through the windows at 8 a.m. in our Regus office in San Francisco. A couple of minutes after we sat down, our UK office peers connected via video conference. The gloom of the London weather, and the atmosphere in that conference room, soon filled our office as well. “We missed our projected revenue numbers by 40%,” our CEO said. Of course, many of us already knew this. We’d been feeling the stress of being behind our target for the last month. The question on all of our minds was, how could we get our sales forecast so wrong?

I’ve been a part of enough sales teams over the last 10 years of my career to know what it feels like to miss a target. The truth is that sales forecasting with perfect accuracy is impossible. Forecasting is by its very nature an exercise in predicting the future. It’s always a best guess. But this quarter was by far the worst. Not only had we missed the target by 40%, we’d also made a number of important business planning decisions based on hitting our number and the resulting new business. We’d opened offices in the United States, hired four senior salespeople, and invested in a large headquarters office in London. The list went on. In that meeting, we all had to own up to our forecasting mistakes and determine how we’d improve going forward.

Anyone who’s been in sales long enough will relate to this story. They’ll relate to the balance between optimism and accuracy—that is, the tendency for nearly everyone in a sales organization to inflate numbers to please their higher-ups. They’ll know how difficult it is to combine both art and science into a single number that affects million-dollar decisions. But as hard as predicting the future may be, it’s possible to make accurate forecasts using data and a disciplined process. Below, I’ve tried to share my story—including both the stumbles and successes—in hopes of helping you develop a sales forecasting technique you can rely on.

Introduction to the Deal Forecasting methodology

I remember that three months into my first job in sales, at Capscan, my sales director, Dave Mead, stopped me in the kitchen area. As I picked up my coffee to head back to my desk, he said, “Ryland, how much revenue are you going to book this month?” My heart racing a bit, I started reeling off everything I was working on and planning to work on. He cut me off and said, “A number will do just fine.”

I ended up taking a rough guess and walked off feeling like an idiot. It felt like such a simple question and yet I struggled so much to answer it.

From that point on, I’ve taken a keen interest in the art of forecasting and its importance.

Later that week, I sat down with Dave and talked through how I could improve my forecasts. I wanted my revenue numbers to be consistent and my forecasts to be accurate. That is where I first learned about the Deal Forecasting methodology, which is simple in principle but depends heavily on skill.

The Deal Forecasting methodology is as simple as listing the deals you expect to close and tallying the revenue you expect to earn from them. It requires minimal data analysis and is more about trusting the sales qualification process than any complicated science. In a smaller organization (20 reps or fewer), it can be very effective because it doesn’t add much overhead or require a sales operations hire to implement.
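In code, that tally is little more than a filtered sum. The sketch below is a minimal illustration, assuming each deal records a value, an expected close date, and whether the rep expects it to close; the `Deal` fields, company names, and figures are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Deal:
    name: str
    value: float      # expected contract value (hypothetical field)
    close_date: date  # close date the rep has entered
    committed: bool   # does the rep expect this deal to close?

def deal_forecast(deals, period_start, period_end):
    """Sum the value of named deals the reps expect to close within the period."""
    return sum(
        d.value
        for d in deals
        if d.committed and period_start <= d.close_date <= period_end
    )

# Hypothetical pipeline for one rep.
deals = [
    Deal("Acme Ltd", 12_000, date(2024, 3, 14), True),
    Deal("Globex", 8_000, date(2024, 3, 28), True),
    Deal("Initech", 15_000, date(2024, 4, 10), True),   # next month: excluded
    Deal("Umbrella", 5_000, date(2024, 3, 20), False),  # not expected to close
]
print(deal_forecast(deals, date(2024, 3, 1), date(2024, 3, 31)))  # 20000
```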

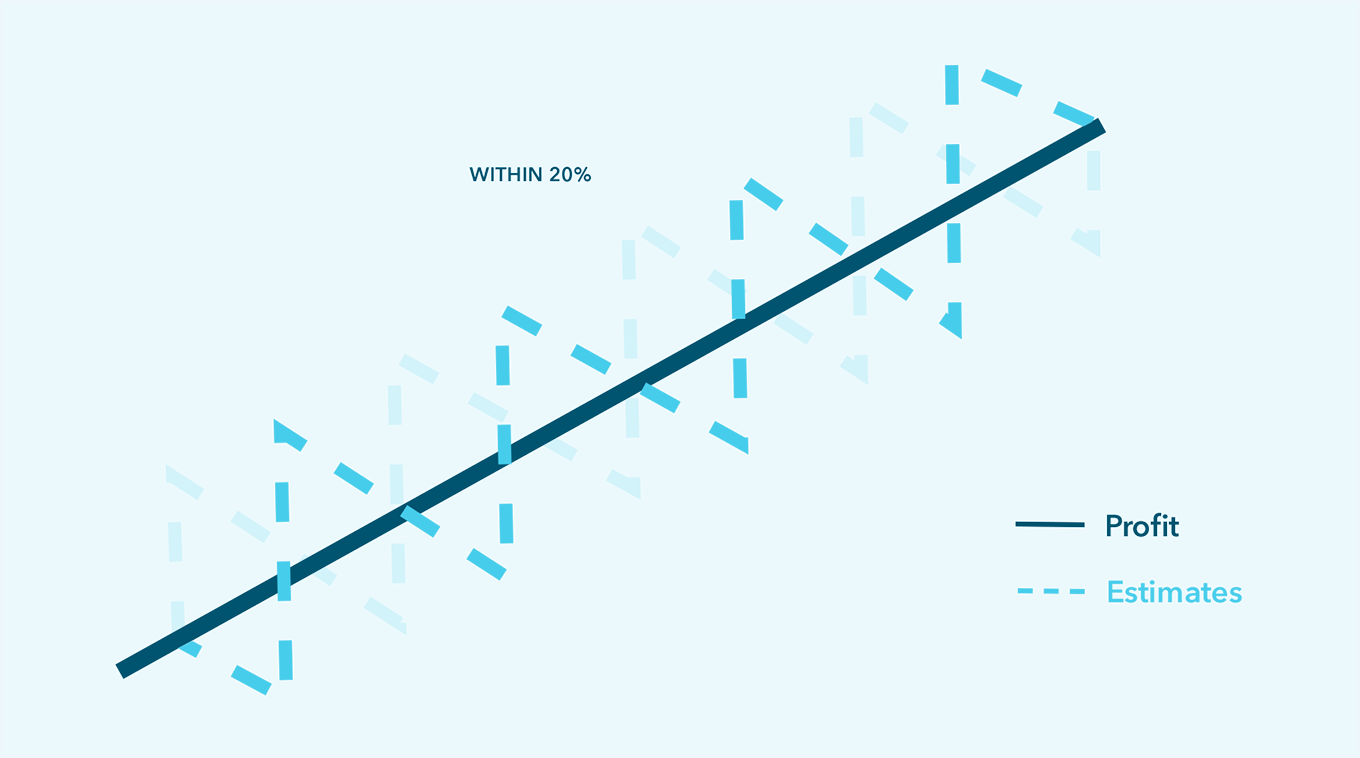

The Deal Forecasting methodology can also work well when deal volumes are low. IncuBus London, one of the companies I advise, is a good example. They sell a B2B service to larger organizations and have only a handful of sales reps, so deal volume is low enough that they’ve been able to stay within the magic 20% margin of error (the accuracy that most CFOs and boards ask for). In other words, they hit their forecast, within that margin, every month.
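Checking whether a month landed inside that 20% margin is simple arithmetic. A minimal sketch, assuming the error is measured as the absolute miss relative to the forecast (some teams measure it against actual revenue instead); the monthly figures are hypothetical.

```python
def forecast_error(forecast, actual):
    """Absolute miss as a fraction of the forecast (an assumed convention)."""
    return abs(actual - forecast) / forecast

def within_tolerance(forecast, actual, tolerance=0.20):
    """True if actual revenue landed within `tolerance` of the forecast."""
    return forecast_error(forecast, actual) <= tolerance

# Hypothetical monthly (forecast, actual) revenue figures.
months = {"Jan": (50_000, 46_000), "Feb": (60_000, 45_000)}
for month, (forecast, actual) in months.items():
    print(month, f"{forecast_error(forecast, actual):.0%}", within_tolerance(forecast, actual))
# Jan 8% True
# Feb 25% False
```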

Having a conversation with reps each week about their pipeline also helps them “pick their battles.” The sales manager sits down with each rep to review deals that have the best chance of closing that month based on product-market fit, decision-maker access, and geography. As a result, everyone is on the same page as to which opportunities they should be focusing on.

However, this model breaks down when you want to forecast beyond your average sales cycle. For example, if your sales cycle is 30 days and you are using a named deal forecast, it is impossible to forecast the following month, because many of the opportunities that will close then have not even been created yet.

In my Capscan days, as a sales rep focused on the short term, my priority was always the last working day of the month. But as I moved into more senior roles, I realized that a 30-day view was not going to give me the information I needed to be successful in my job. How could I plan how many people to hire, set sales quotas, or tell the marketing team how many leads we required, let alone convince venture capitalists to invest millions of dollars in our business, if I couldn’t see further than a month ahead? Capscan went on to be acquired by GB Group for millions, and I assure you that decision was based on more than the next 30 days of deals.

Deal Forecasting Method

Pros

- Provides managers with deep insights into the deals that matter

- Doesn’t require historical data

Cons

- High risk due to dependence on sales reps’ qualification diligence

- Impossible to predict beyond your average sales cycle time

Improving Sales Forecasting as Sales Cycles Grow

When I moved on from Capscan to join Huddle at the beginning of their journey, we initially used the Deal Forecasting technique. But as our sales cycle time grew and we began to close bigger deals, we quickly ran into its limitations. I learned within the first few months that this model was only accurate at predicting deals at the bottom of the pipeline.

Our first step was working with our Commercial Director, Charlie Blake-Thomas, to improve our deal forecasting through a much stronger qualification process. Charlie had a keen eye for detail, and I quickly noticed a pattern that he followed in each sales meeting. Even when the most amiable of CMOs began speaking during a meeting, he had a way of cutting through the fluff and getting them to agree to exact numbers (like the number of leads they would deliver to our sales team).

Charlie taught me in-depth sales techniques using the BANT qualification method and the CustomerCentric approach to deal management. By following a standardized process across the team, we were able to build our first weighted forecasts (an Opportunity Stage Forecasting technique), which introduced me to the next evolution of sales forecasting I’d use in my career.

We used past sales data on how many leads had turned into closed-won opportunities and collaborated with the entire sales team to understand the typical steps required to close a deal. We then built a clear and concise pipeline process, with a prediction of the revenue we would close from each stage. Our model looked something like the chart below, which is fairly standard for a SaaS SMB/mid-market process.
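The stage predictions can be derived from closed deals and then applied to the open pipeline. The sketch below is one minimal way to do that, assuming each closed deal is recorded with the furthest stage it reached and whether it was won, and that won deals passed through every stage; the stage names, deal history, and pipeline values are hypothetical, not Huddle’s actual data.

```python
# Hypothetical pipeline stages, in order; won deals are assumed to pass through every stage.
STAGES = ["Qualified", "Demo", "Proposal", "Negotiation"]

def stage_win_rates(history):
    """history: list of (furthest_stage_reached, won) for closed deals.
    For each stage, return the share of deals that reached it and were won."""
    rates = {}
    for i, stage in enumerate(STAGES):
        outcomes = [won for furthest, won in history if STAGES.index(furthest) >= i]
        rates[stage] = sum(outcomes) / len(outcomes) if outcomes else 0.0
    return rates

def weighted_forecast(pipeline, rates):
    """pipeline: list of (current_stage, deal_value) for open deals."""
    return sum(value * rates[stage] for stage, value in pipeline)

# Hypothetical closed deals: 8 lost at various stages, 2 won.
history = (
    [("Qualified", False)] * 4
    + [("Demo", False)] * 2
    + [("Proposal", False)]
    + [("Negotiation", False)]
    + [("Negotiation", True)] * 2
)
rates = stage_win_rates(history)
print({stage: round(r, 2) for stage, r in rates.items()})
# {'Qualified': 0.2, 'Demo': 0.33, 'Proposal': 0.5, 'Negotiation': 0.67}

open_pipeline = [("Demo", 10_000), ("Proposal", 10_000), ("Negotiation", 12_000)]
print(round(weighted_forecast(open_pipeline, rates)))  # 16333
```

Recomputing `rates` from fresh history each month is the “adjusting the weights” step; the cost of doing that by hand is what grows as the team scales.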

Based on the example above, our reps could have up to 10 working deals a month. At the time, we had an average order value (AOV) of $10,000, and we knew that our win rate on newly created opportunities with a clearly expressed need and timeframe was 25%. Therefore, if reps consistently qualified against those criteria, we could forecast revenue from the opportunities created. The real-life maths for each rep was this: we knew we could hire more reps to hit a $25,000 monthly target, provided marketing delivered the agreed level of leads to create four qualified opportunities a month. We were also able to make informed decisions by monitoring conversion rates at each stage of the pipeline and adjusting the weights accordingly.
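The per-rep maths reduces to expected revenue = qualified opportunities × win rate × AOV. The sketch below uses the $10,000 AOV, 25% win rate, and $25,000 target from the text; the team target and the helper function names are hypothetical, and it assumes every opportunity meets the qualification criteria and resolves within the month.

```python
import math

# Figures from the text: average order value, qualified-opportunity win rate, per-rep target.
AOV = 10_000
WIN_RATE = 0.25
REP_MONTHLY_TARGET = 25_000

def expected_revenue(opportunities, win_rate=WIN_RATE, aov=AOV):
    """Revenue expected from a number of qualified opportunities."""
    return opportunities * win_rate * aov

def opportunities_needed(target=REP_MONTHLY_TARGET, win_rate=WIN_RATE, aov=AOV):
    """Qualified opportunities a rep needs to hit the target (rounded up)."""
    return math.ceil(target / (win_rate * aov))

def reps_needed(team_target, rep_target=REP_MONTHLY_TARGET):
    """Reps required to cover a team target (rounded up)."""
    return math.ceil(team_target / rep_target)

print(expected_revenue(10))    # 25000.0
print(opportunities_needed())  # 10

# Hypothetical team target: how many reps to hire, and how many
# qualified opportunities marketing must help generate each month.
team_target = 100_000
reps = reps_needed(team_target)
print(reps, reps * opportunities_needed())  # 4 40
```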

A couple of months after developing this forecasting method, we used it to inform a decision to invest in an inside sales team in the US. When I moved to San Francisco to help build that team with Lesley Young—one of the brightest minds in the Valley—I saw firsthand how weekly one-on-one meetings and an Excel spreadsheet could lead to accurate forecasts.

The model that we developed at Huddle was perfect early on because it enabled us to predict with relative accuracy without developing a complex predictive model. However, as we scaled, it became difficult and time intensive to update the probabilities associated with each stage. This led to decreasing forecast accuracy and increasing administrative cost. As we hired more reps, it also became difficult to enforce proper Salesforce usage. Close dates were wildly inaccurate, or missing altogether in some cases.

As we started to move upmarket to larger deals with longer sales cycles, the maths behind our 25% close rate no longer held up over a 30-day period. I learned that for all the allure that larger deals and bigger customer logos bring, they make it difficult to forecast accurately. In addition, a bigger proportion of our business started coming from government. Larger enterprises and government organizations have far more complex buying cycles, so we had a number of deals significantly above our average order value that would get stuck in our sales process. Although local managers could handle this through deal reviews, at a more senior level this stage of forecasting (the weighted forecasting process) was introducing a lot of inaccuracies into our board reports.

Weighted Forecast Model

Pros

- Great for getting a process in place for forecasting when you have reps working 8–12 deals a month

- Creates focus and structure for pipeline management

- Drives accuracy in forecasting, as reps are forced to follow a process in order to progress a deal

Cons

- High admin overhead to constantly check that sales stages are accurate

- At VP or director level, long sales cycles and aging opportunities can skew the forecast when you are not close to every deal in the business

Category Forecasting and Moving Toward a Predictive Model

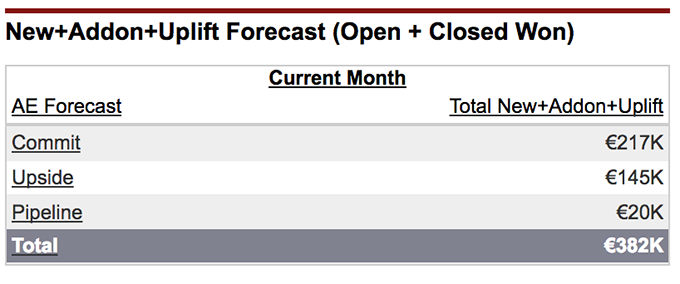

Toward the end of my time at Huddle, we implemented what is known as the “Category Forecasting technique.” This method was simple enough that we didn’t need to have a full-time sales operations hire dedicated to managing it, but it was more nuanced than the Weighted Forecast because it accounted for how a rep was feeling about a given deal. In other words, it considered the art as much as the science.

We added a new, mandatory field in Salesforce, and reps had to mark each deal with one of three categories:

Commit

Verbal confirmation to sign by the close date the sales rep has marked in the CRM application

Upside

The sales opportunity has the propensity to close but not all decision makers have been engaged

Pipeline

No clear understanding of the decision-making process, or of what might stall it (e.g., an RFP process)

By overlaying this data on the weighted forecast call, we were able to see what the science was telling us (based on historical conversion rate data) and combine it with the art of the reps’ forecast categories (based on how each rep was feeling about a deal).
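One way to put the science and the art side by side is to compute the stage-weighted number next to category totals. The sketch below assumes a common convention of treating Commit as a floor and Commit plus Upside as a ceiling; that convention, the stage weights, and the opportunities are hypothetical, not a description of Huddle’s exact report.

```python
from dataclasses import dataclass

# Hypothetical stage weights, e.g. taken from historical conversion rates.
STAGE_WEIGHTS = {"Qualified": 0.25, "Demo": 0.40, "Proposal": 0.60, "Negotiation": 0.80}

@dataclass
class Opportunity:
    value: float
    stage: str
    category: str  # "Commit", "Upside", or "Pipeline" (the rep's call)

def forecast_views(opps):
    """Return the weighted (science) number next to category-based (art) numbers."""
    weighted = sum(o.value * STAGE_WEIGHTS[o.stage] for o in opps)
    commit = sum(o.value for o in opps if o.category == "Commit")
    upside = sum(o.value for o in opps if o.category == "Upside")
    return {"weighted": weighted, "commit": commit, "commit_plus_upside": commit + upside}

opps = [
    Opportunity(20_000, "Negotiation", "Commit"),
    Opportunity(10_000, "Proposal", "Upside"),
    Opportunity(15_000, "Demo", "Pipeline"),
    Opportunity(10_000, "Qualified", "Pipeline"),
]
for name, amount in forecast_views(opps).items():
    print(f"{name}: {amount:,.0f}")
# weighted: 30,500
# commit: 20,000
# commit_plus_upside: 30,000
```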

Because reps had to follow a strict process each time they created or updated an opportunity, the pipeline stages became more accurate. It also helped us spot reps who kept deals at an early stage of the pipeline and then closed them abruptly (e.g., marking a deal as commit and moving it from the 25% stage to closed in a single day), so we could coach them on using pipeline stages correctly.
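Spotting those jumps can be automated from a deal’s stage history. A minimal sketch, assuming you can export each deal’s stage changes with timestamps; the stage names, the one-day window, and the sample data are hypothetical.

```python
from datetime import datetime, timedelta

def flag_stage_jumps(history, early_stages=frozenset({"Qualified"}), window=timedelta(days=1)):
    """history maps (rep, deal_id) -> chronological list of (stage, timestamp) changes.
    Flag deals that moved from an early stage straight to Closed Won within `window`."""
    flagged = []
    for key, changes in history.items():
        # Compare each stage change with the one before it.
        for (prev_stage, prev_ts), (stage, ts) in zip(changes, changes[1:]):
            if prev_stage in early_stages and stage == "Closed Won" and ts - prev_ts <= window:
                flagged.append(key)
                break
    return flagged

# Hypothetical stage histories exported from the CRM.
history = {
    ("Alex", "D-101"): [
        ("Qualified", datetime(2024, 3, 1, 9)),
        ("Demo", datetime(2024, 3, 8, 9)),
        ("Closed Won", datetime(2024, 3, 20, 9)),
    ],
    ("Sam", "D-102"): [
        ("Qualified", datetime(2024, 3, 27, 9)),
        ("Closed Won", datetime(2024, 3, 27, 17)),
    ],
}
print(flag_stage_jumps(history))  # [('Sam', 'D-102')]
```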

When I got to Peakon, where I am currently the Chief Revenue Officer, I immediately found a team of people excited to use data to improve the Category Forecasting method even more. No person was more excited about using data in the sales process than our co-founder Dan Rogers (who I also learned can drink like a rugby player).

One afternoon last year, we sat down with a six-pack of beer to determine how we could improve our forecasting before going out for our next round of funding. As a company that sells an analytics product, we knew that investors would want to see a more nuanced forecasting model. So we looked at the flow of leads to determine what makes a lead “good” and how we might automate part of the qualification process to start projecting revenue based on how many leads we had.

We quickly spotted trends based on geography, company size, and job title, and updated our forecast model to account for them. We no longer attached a single probability to each lead stage; instead, each lead was given its own probability based on historical conversion data for similar leads. Rather than saying that a website signup had a 10% chance of closing, we evaluated where the lead had come from (lead source), which country their IP address was located in (geography), and who at the company had signed up (job title).

If a lead looked exactly like 10 others that had closed quickly, then it might get a 25% probability.
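The exact model isn’t described here, so the sketch below swaps in the simplest version of the idea: an exact-match lookup on lead source, country, and job title, falling back to a flat base rate (the 10% website-signup figure above) when too few similar leads exist. The `min_samples` threshold, the helper names, and the sample leads are hypothetical; a production model would more likely use a statistical technique such as logistic regression.

```python
from collections import defaultdict

def conversion_table(history):
    """history: iterable of (source, country, title, converted) for past leads.
    Returns {(source, country, title): (conversion_rate, sample_size)}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [converted, total]
    for source, country, title, converted in history:
        counts[(source, country, title)][0] += int(converted)
        counts[(source, country, title)][1] += 1
    return {key: (won / total, total) for key, (won, total) in counts.items()}

def lead_probability(lead, table, base_rate=0.10, min_samples=10):
    """Use the rate of identical past leads if there are enough of them,
    otherwise fall back to a flat base rate."""
    rate, n = table.get(lead, (base_rate, 0))
    return rate if n >= min_samples else base_rate

# Hypothetical lead history.
history = (
    [("Webinar", "UK", "HR Director", i < 3) for i in range(12)]  # 3 of 12 converted
    + [("Signup", "US", "Analyst", i < 1) for i in range(4)]      # too few to trust
)
table = conversion_table(history)
print(lead_probability(("Webinar", "UK", "HR Director"), table))  # 0.25
print(lead_probability(("Signup", "US", "Analyst"), table))       # 0.1
print(lead_probability(("Referral", "DE", "CEO"), table))         # 0.1
```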

Data points that influence our forecast

- Employee’s personal win rates

- Lead score

- Opportunity age

- Vertical

- Company size

Of course, no company can perfectly predict how much revenue they will make in a given month, quarter, or fiscal year, just like no meteorologist can perfectly predict the weather. There are simply too many variables to consider. Every business is affected by both macroeconomic and microeconomic factors. Interest rates in Japan affect the price of oil in Kuwait, which in turn affects how much money your would-be perfect customer can invest in analytics software. In the age of Software-as-a-Service (SaaS), your competitors’ products can, and do, change every day. So perfection is not the goal. Instead, forecasting is about getting close enough to make informed decisions.

Yet “close enough” is not an exact figure either. Ultimately, every business must determine how much time and resources it can devote to forecasting, as opposed to the hundreds of other activities it could be doing. In my experience, the best approach is the same as it is in just about every other facet of sales: start swimming. Learn as you go. Get better every day, and remember that if you don’t track it, you can’t measure it, let alone predict it!